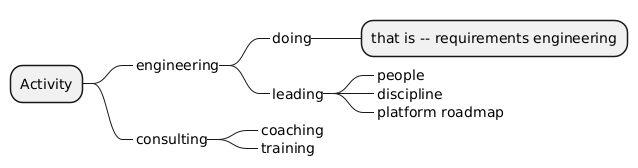

I keep drawing conceptual models by hand – even in published documentation such as medical device technical files. Some colleagues have kindly tried to enlighten me about how inefficient and poor an engineering practice it is. In this post I’ll go through the reasons why I think it makes a lot of sense. But first, my practice.

How

- I draw on a white sheet of paper

- which I scan to an image file

- which I upload to the target digital tool, e.g. Confluence or Polarion.

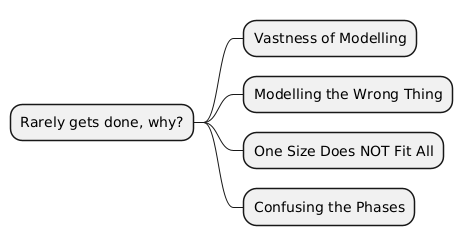

Let’s first have a look at the reasons against it.

Drawbacks of hand-drawn diagrams

- painful to maintain – even small changes to an existing hand-drawn model take considerable effort

- poor version control – I ended up keeping the latest paper version of my diagrams in case I needed to change them later

- poor integration into a digital toolchain – e.g. the text is not searchable – so consistency relies on manual, error-prone effort.

Why would I nevertheless keep publishing hand-drawn diagrams?

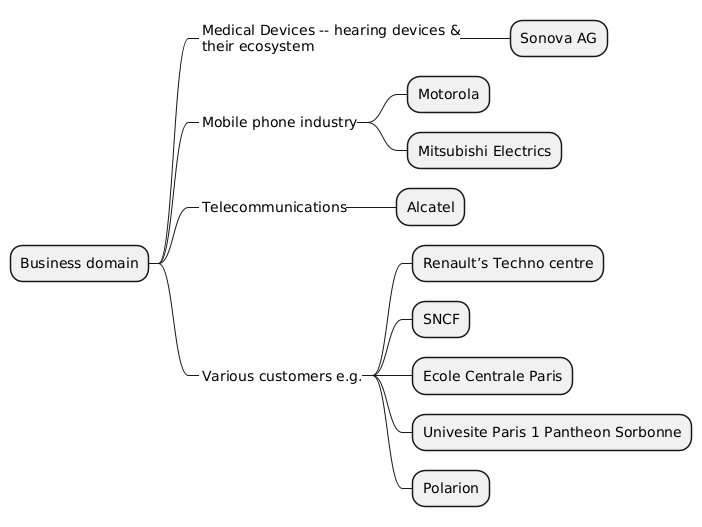

A counterexample

Sparx Systems Enterprise Architect is a “visual modelling and design tool based on the OMG UML“ (Wikipedia). The image above is taken from the company’s home page (https://www.sparxsystems.de, retrieved 19.06.25, same pixel density), so I have not tricked this tool into producing a poor visual rendering. I did, however, choose Enterprise Architect deliberately, as I have experienced how this tool can deliver poor visuals.

Now, can you see what I dislike about this image?

Its rendering is not readable. This is not for lack of screen real estate; the tool simply does not seem to intend the content to be read. I am puzzled both by the tool’s inability to produce readable content and by my fellow engineers’ persistence in rendering conceptual models with it. And also by the Sparx Systems marketing team’s decision to illustrate their tool with this unreadable image. I’ll contact them and try to understand.

Below is my rendering, as an illustration of what is missing above: same content (well, what I could decipher), same poor resolution and same screen real estate (my version is actually smaller):

From a Sensemaking perspective, this makes a major difference.

Let us now dive into the reasons why using a digital tool may contribute to poor conceptual modelling.

Benefits of drawing conceptual models by hand

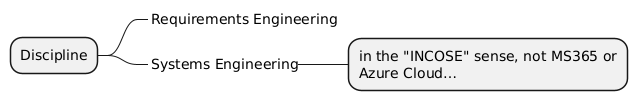

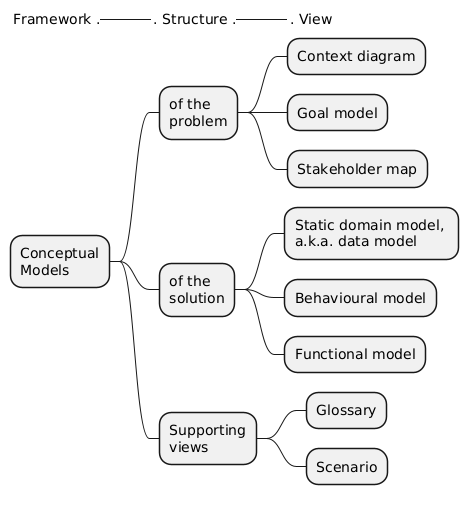

In “Why Conceptual Modelling?” I explained that the purpose of conceptual modelling is Sensemaking – helping engineering stakeholders make sense of the World and the Machine as they interact. Building on this paradigm, here are the benefits of drawing conceptual models by hand:

- By getting rid of the technical constraints of the tool, drawing by hand allows the modeller (you) to focus on the essence – sensemaking among people. As shown above, you want to make the diagram easy to read – legible text and notation, logically organized modelling items, a clear flow – and you want to get rid of distractions.

- Drawing by hand forces the modeller (you) to get it right. Although I never exceed an A4 page, such a drawing is slow and requires effort – so you had better get it right the first time. This has two consequences: 1) you are led to invest the time needed to capture the essence, so that you do not have to change it later; and 2) you are more focused on, and dedicated to, each pen stroke. As architects report, hand drawing requires presence and attention: there is no “undo” on your pen, and each pen stroke is valuable. In my experience, hand-drawn diagrams live longer.

- By removing the constraints of the digital tool, drawing by hand makes you think better. Given the two previous points, one might think modelling is the projection of a pre-existing mental construct onto a sheet of paper, a “snapshot of your brain”, and that drawing by hand simply helps you do it better. Well, there is more to it. Drawing is an act of thinking. As with writing, your thoughts, ideas and concepts emerge from the act of drawing. The pre-existing mental construct gets vastly improved and strengthened by the act of drawing. As a consequence, the drawing constraints of a digital tool become thinking constraints and hinder your design. I claim that, by removing the constraints of the digital tool and reducing the distance from your mind to the paper, drawing by hand not only allows you to better represent your thinking, but also improves your thinking and makes you design better.

Here are a few concrete examples: simplifying, grouping, revealing. Constrained by the limited size of your sheet of paper, you are forced to simplify and abstract the elements of your diagram, and also to shorten their names. While representing the relations between items, you need to group them, and useful abstractions may emerge from this. Thirdly, implicit or hidden ideas may emerge from the two-dimensional structure, for example a visual gap in the symmetry that reveals a gap in your thinking.

- Drawing by hand is an excellent learning tool. Once you have learned to get rid of distractions and to get it right the first time (as above), you can replicate your intended rendering in digital tools, and you know how to choose the tools that do not get in the way of your thinking too much.

- Your drawing skills can also be used live in meetings, on flip-charts or whiteboards: with real people, in analog. This enables real-time collaboration on an emerging diagram, and therefore collective learning and sharing, that is, Sensemaking.

- It lowers the reader’s threshold for cognitive appropriation. A hand-drawn diagram, for example in a requirements specification document, conveys the message “I have invested effort in drawing this image by hand because I care and I think it matters to you“. I have experienced how this human focus of the diagram positively influences how much cognitive energy the reader invests in making sense of it. A bit like the difference between writing an email and sitting down together over a coffee. This is serious stuff: we’re talking about aligning, and sometimes inventing, concepts. In short, new concepts hurt – up to the moment they become obvious. You need to remove any obstacle to this cognitive appropriation and learning.

Summing it up:

Examples

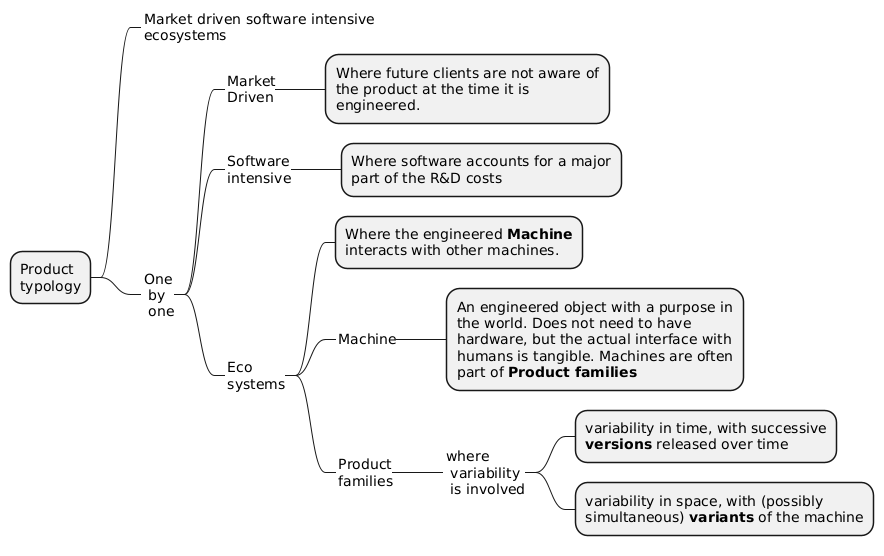

With PlantUML

PlantUML is an excellent tool that generates diagrams from a text script. Here is our example – first the text script, then its rendering:

```plantuml
@startuml
vehicle <|-- bicycle
bicycle *-- "2" wheel
@enduml
```

(feel free to try it at https://editor.plantuml.com/uml)

Text-based modelling has many benefits, e.g. seamless integration into existing change control tools.

However, you have very little control over the graphic rendering. In this example, some parts of the diagram waste screen real estate, such as the large “C” icons and the horizontal bars.

As you’ll see in the final comparison image below, the text is small relative to the overall height of the diagram.
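A minimal sketch, assuming a recent PlantUML version: the same script with some of the decoration switched off. `hide circle` should remove the “C” icons, `hide empty members` the empty attribute and method compartments (the horizontal bars), and `skinparam defaultFontSize` enlarges the text; these directives and the value 18 are additions for illustration, not part of the example above. Note that the placement of the elements is still decided by the layout engine, so this reduces clutter without giving you the control you have with a pen.

```plantuml
@startuml
' remove the "C" class icons
hide circle
' remove empty attribute/method compartments (the horizontal bars)
hide empty members
' enlarge all text; 18 is an arbitrary example value
skinparam defaultFontSize 18

vehicle <|-- bicycle
bicycle *-- "2" wheel
@enduml
```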

With draw.io (now “diagrams.net”)

draw.io (now “diagrams.net”) is a cross-platform graph drawing application, which I have learned to use as an alternative to Microsoft Visio.

As diagrams.net is not a modelling tool but a drawing tool, it allows me to render precisely what I want, with fewer disturbances and constraints than modelling tools. As an illustration, the result above is very similar to my initial hand-drawn diagram. diagrams.net is my preferred drawing tool when I do not draw by hand (and often after I have first sketched my diagram by hand).

If I had blindly used draw.io’s preset UML objects, I might have ended up with the overloaded result below, which illustrates my point: first learn to draw by hand, and focus on what matters.

Wrapping up

Hand-drawn conceptual modelling is an excellent practice, as it:

- allows the modeller (you) to focus on the essence,

- forces you to get it right the first time,

- makes you think better,

- signals that you, as a human, care about the content,

- and therefore eases the cognitive appropriation by the reader.

Try it – and share your experience with us.

(article published as https://www.linkedin.com/posts/daniellucashirtz_i-keep-drawing-conceptual-models-by-hand-activity-7343233396774469634-o8TL – comments welcome)

